from GSA Today, v.10, October 2000

Evaluating Global Warming: A Post-1990s Perspective

David S. Gutzler, Department of Earth and Planetary Sciences, University of New Mexico, Albuquerque, NM 87131-1116, USA

ABSTRACT

Globally averaged surface-air temperature warmed approximately 0.5 °C during the twentieth century, and the rate of warming has accelerated considerably since about 1980. Proxy climate data suggest that current global temperatures are warmer than at any time in the last millennium. As this trend persists, the likelihood increases that the warming is due at least in part to anthropogenic inputs of atmospheric greenhouse gases. There is no debate over the measured increases in greenhouse gas concentrations, or the anthropogenic origin of these increases, or the direct radiative effect of increased greenhouse gas concentrations.

Public debate and policy development on global warming are stuck, however, in part because it remains exceedingly difficult to specifically attribute current global warming to increases in greenhouse gases, or to make confident predictions of the rate and spatial variability of future warming. Attribution and prediction of global warming both depend on large-scale modeling, and the complexities associated with simulating the climate system are so great that conclusive attribution and prediction will probably not be reached for some time. These uncertainties tend to overshadow the higher degree of certainty associated with observational evidence for global warming. The scientific community should acknowledge that the attribution and prediction problems will not be resolved to the satisfaction of policy makers in the near future, and should instead work toward establishing new paradigms for partnership with policy makers, with greater emphasis on observations of past and present climate change.

INTRODUCTION

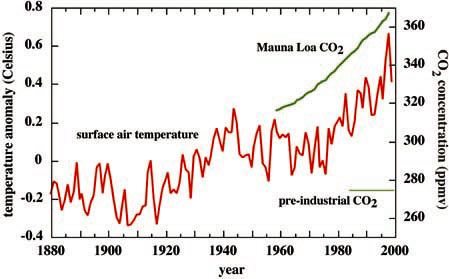

Earth's climate is warming. The Athabasca glacier shown in Figure 1 has steadily retreated during this century, as have many other glaciers throughout the world (Oerlemans, 1994). Figure 2 shows an instrumental record of globally averaged surface-air temperature since 1880, when sufficient thermometers were in service worldwide to make such an average meaningful. Temperature has increased since 1880 by approximately 0.5 °C. The warming trend has not been steady during the twentieth century; rapid warming during the first and last thirds of the century occurred before and after modest cooling in mid-century. The six warmest years of the 120-year record shown in Figure 2 all occurred in the 1990s. The warmest year is 1998; the year just ended, 1999, is the fifth warmest in the record.

Figure 2. Red curve shows annual global mean surface-air temperature for the period 1880-1999, obtained from the U.S. National Climatic Data Center. The mean temperature for the period of record has been removed. Thick green curve shows annual mean concentration of CO2 (ppmv) for the period 1959-1999, sampled at Mauna Loa Observatory, Hawaii (Keeling and Whorf, 1999). The preindustrial CO2 concentration of about 275 ppmv is marked by the lighter green line.
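The Figure 2 caption describes two steps: removing the period-of-record mean to get anomalies, and summarizing the change over time. A few lines of Python can sketch both. This is a minimal illustration, not NCDC's processing. The input series is synthetic and the function names are hypothetical. An ordinary least-squares line is only one way to summarize a record, and the warming described above was not steady.

```python
from statistics import mean


def anomalies(values):
    """Return each value minus the mean of the whole record (the Figure 2 convention)."""
    base = mean(values)
    return [v - base for v in values]


def linear_trend(years, values):
    """Ordinary least-squares slope of `values` against `years`, in units per year."""
    ybar, vbar = mean(years), mean(values)
    num = sum((t - ybar) * (v - vbar) for t, v in zip(years, values))
    den = sum((t - ybar) ** 2 for t in years)
    return num / den


# Synthetic series for illustration only (NOT observed data):
# a steady rise of 0.005 °C per year from a 14 °C starting value.
years = list(range(1880, 2000))
temps = [14.0 + 0.005 * (y - 1880) for y in years]

anom = anomalies(temps)            # centred on zero; the shape of the curve is unchanged
slope = linear_trend(years, anom)  # removing a constant does not alter the slope
print(f"{slope * 100:.2f} °C per century")  # prints "0.50 °C per century"
```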

The temperature data in Figure 2 are subject to significant uncertainties, but these are relatively well constrained. There is no serious doubt that surface temperatures warmed somewhat in the twentieth century and that the warming trend accelerated near the end of the century. In addition to measurement uncertainties associated with particular thermometers, the network of thermometers over the world oceans is quite sparse, and numerous temperature records over land are taken at urban sites that may be subject to local microclimatic warming as cities grow (although the temperature data in Figure 2 have been processed in an attempt to remove this effect). Furthermore, globally averaged temperatures in the lower troposphere derived from satellites show less rapid warming since 1980 than the surface thermometer data. The difference between surface and satellite records has not been fully reconciled, but a recent comparative study confirms the reality of the twentieth century surface warming trend (National Research Council, 2000).
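Sparse coverage matters because a "global" mean is an area-weighted average over the grid cells that actually contain observations. The sketch below makes two assumptions. It uses a regular latitude-longitude grid, where cell area is proportional to the cosine of latitude. It skips cells that have no data. The function name is hypothetical, and operational analyses handle gaps and urban effects in more elaborate ways.

```python
import math


def area_weighted_mean(cells):
    """Mean of grid-cell anomalies weighted by cos(latitude).

    `cells` is a list of (latitude_deg, anomaly) pairs. A cell with no
    observations is passed as anomaly=None and skipped, so the result is an
    average over the *observed* area only (an assumption of this sketch).
    """
    num = 0.0
    den = 0.0
    for lat, anom in cells:
        if anom is None:  # e.g. a data-sparse ocean cell
            continue
        w = math.cos(math.radians(lat))  # cell area shrinks toward the poles
        num += w * anom
        den += w
    if den == 0.0:
        raise ValueError("no observed cells")
    return num / den


# Hypothetical cells: an equatorial cell counts twice as much as one at 60°,
# and the empty cell at 30°S drops out entirely.
cells = [(0.0, 1.0), (60.0, -1.0), (-30.0, None)]
print(round(area_weighted_mean(cells), 3))  # prints 0.333
```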

During this same period, the atmospheric concentrations of carbon dioxide, methane, and several other important greenhouse gases have increased substantially (for CO2, thick green curve, Fig. 2). There is no debate over the fact that greenhouse gas concentrations are increasing rapidly and that the buildup is unequivocally anthropogenic (Intergovernmental Panel on Climate Change, 1995), resulting from the burning of fossil fuels and forests and from expanding agriculture.

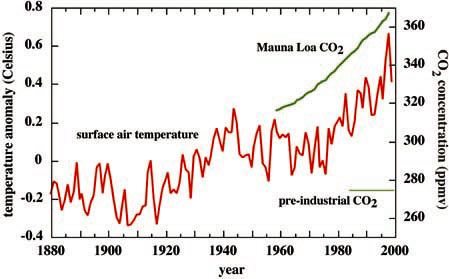

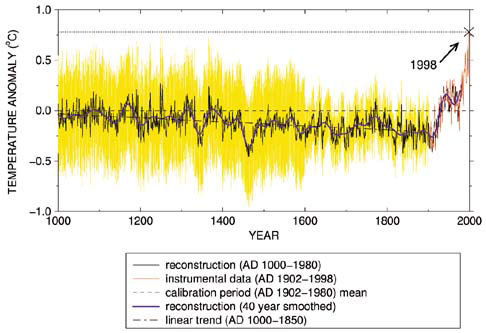

Although less than a degree of global warming in the twentieth century may seem small, this amount of warming is actually quite large compared to previous climate variability in the late Holocene. Figure 3 shows a time series of estimated Northern Hemisphere temperatures for the past 1000 years (Mann et al., 1999), which suggests that the observed warming in the twentieth century is both large and rapid, resulting in late twentieth century temperatures significantly warmer than at any time in the last millennium.

Figure 3. Reconstructed Northern Hemisphere temperatures since 1000 A.D. (Mann et al., 1999), referenced to 1902-1980 mean. Preinstrumental data are derived from a spatially weighted combination of twelve proxy climate indices based on tree ring and ice core data, calibrated against twentieth century instrumental record (red curve). Yellow shading shows an envelope of uncertainty (2σ) of annual temperature estimates. A modest cooling trend is suggested for most of the millennium, although uncertainties are large prior to 1400 A.D. Twentieth century warming is large, abrupt, and significant in comparison with variability exhibited in earlier centuries.
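The simplest possible version of the calibration step in the Figure 3 caption has three parts. First, regress instrumental temperature on a single composite proxy index over the overlap period. Next, apply the fitted line to earlier proxy values. Finally, use the residual scatter to set a 2σ envelope. This sketch rests on several assumptions and uses hypothetical names and made-up numbers. Mann et al. (1999) combined many proxy series with a more elaborate multivariate calibration. Their uncertainty envelope also varies through time, whereas the one in this toy is constant.

```python
import math
from statistics import mean


def calibrate(proxy, temp):
    """Least-squares fit temp ≈ a + b * proxy over the calibration (overlap) period."""
    pbar, tbar = mean(proxy), mean(temp)
    b = sum((p - pbar) * (t - tbar) for p, t in zip(proxy, temp)) / sum(
        (p - pbar) ** 2 for p in proxy
    )
    a = tbar - b * pbar
    return a, b


def residual_sigma(proxy, temp, a, b):
    """Standard error of the calibration residuals (n - 2 degrees of freedom)."""
    resid = [t - (a + b * p) for p, t in zip(proxy, temp)]
    return math.sqrt(sum(r * r for r in resid) / (len(resid) - 2))


def reconstruct(proxy_values, a, b, sigma):
    """Apply the calibration to older proxy values; return (estimate, 2-sigma) pairs."""
    return [(a + b * p, 2.0 * sigma) for p in proxy_values]


# Made-up overlap-period data: proxy index and instrumental anomaly (°C).
proxy_20c = [0.0, 1.0, 2.0, 3.0]
temp_20c = [-0.2, 0.05, 0.15, 0.4]

a, b = calibrate(proxy_20c, temp_20c)
sigma = residual_sigma(proxy_20c, temp_20c, a, b)
for est, band in reconstruct([-1.0, -0.5], a, b, sigma):
    print(f"{est:+.3f} ± {band:.3f} °C")
```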

What, if anything, people should do about greenhouse gas emissions in hopes of mitigating future global warming is one of the most contentious and important long-term environmental issues facing the world today. In this paper I present one view of why it has been so difficult to create science-based political consensus on global warming. To interact effectively with policy makers, the research community must place climate change into a context meaningful to the public. With this in mind I will present a short description of the policy framework for dealing with global warming and then present progressively more detailed snapshots of climatic conditions in the 1990s, the year 1999, and the boreal winter of 1999-2000.

Discussion of recent climate anomalies leads to a discussion of the difficult, and related, problems of attributing climate change to a particular cause and making quantitative predictions of future change. I argue that fundamental limitations in our ability to model the climate system in terms of forcing and response will preclude resolutions to these problems definitive enough to satisfy skeptical policy makers for years to come. The scientific community should refocus public debate on aspects of climate science that are more tangible and certain, based principally on observations. We should emphasize that model-based prediction is primarily a tool for describing a range of possible future climate scenarios, which cannot be predicted with certainty, and make better use of the observed climate record as the benchmark for these scenarios.

GLOBAL WARMING POLICY

To provide a framework for constructive scientific input to international climate change policy initiatives, the Intergovernmental Panel on Climate Change (IPCC) was created in 1988 by the World Meteorological Organization (WMO) and the United Nations Environment Programme. The IPCC's First Assessment Report was released two years later (IPCC, 1990). Following this report, the U.N. Framework Convention on Climate Change, or FCCC, was negotiated in Rio de Janeiro in June 1992. It contains statements of principles regarding the potential impacts of anthropogenic climate change and the desire to stabilize greenhouse gas emissions, but does not include specific emissions quotas or legally binding enforcement provisions. Climate change in the FCCC is defined as change "attributed directly or indirectly to human activities," making the attribution problem a central component of global warming policy. The FCCC entered into force in March 1994 after having been ratified by 50 countries.

Emissions quotas designed to take steps toward achieving the goals of the FCCC were negotiated at the Third Conference of the Parties to the FCCC, held in Kyoto, Japan, in December 1997, after the release of the IPCC's Second Assessment Report (IPCC, 1995). However, the Kyoto Protocol has not entered into force. As of January 2000, the protocol had been ratified by just 22 of the 84 parties that signed it. It will become legally binding only when at least 55 parties, including parties accounting for at least 55% of developed-country emissions, have ratified it. The most populous of the 22 ratifier nations is Uzbekistan.

CLIMATE CHANGE IN THE 1990s

The 1990 First Assessment Report was written and released shortly after the accelerated warming of the late twentieth century had commenced (Fig. 2). The report makes a strong distinction between general detection of a change in climate that seems to be significantly larger than some estimate of natural variability, and the attribution of such a climate change to a specific cause (IPCC, 1990, ch. 8). In 1990, it was possible to state with reasonable certainty that the climate had warmed during the twentieth century. Global mean temperatures then continued to rise in the years leading up to the Rio de Janeiro summit and the adoption of the FCCC in 1992. By 1995, when the Second Assessment Report was released, the detection issue was more firmly resolved than was possible five years earlier. The IPCC's Third Assessment Report, now in preparation and scheduled for release in April 2001, will surely contain even stronger statements on the significance of twentieth century climate change based on improved global estimates of Holocene climate variability such as shown in Figure 3.

The year 1999 marked the end of the warmest decade of an anomalously warm century (Fig. 2). The overall warmth of 1999 is particularly noteworthy considering the presence last year of La Niña conditions in the tropical Pacific. La Niña, the cold ocean phase of the El Niño-Southern Oscillation cycle, has been shown to have a cooling effect on globally averaged surface temperatures (Jones, 1988). Thus, the warm anomaly of 1999 occurred in spite of the effects of the El Niño-Southern Oscillation cycle on global temperatures; the warm phase of that cycle almost certainly contributed to the record warm global temperatures of 1997-1998 (Karl et al., 2000), which occurred during an extreme El Niño event. The fact that the fifth warmest year on record occurred during the subsequent La Niña event adds to the evidence that global warmth in 1999 (and, by extension, the overall warming trend of the 1990s) was associated with some other factor.

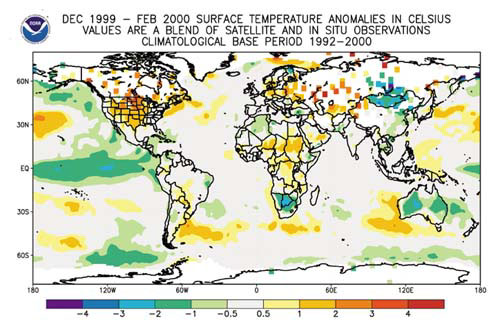

As if to end the 1990s with a climatic exclamation point, the most recent boreal winter (December 1999-February 2000) was remarkably warm across much of the globe (Fig. 4). Last winter was the warmest ever recorded across the 48 contiguous United States: There were no regions of the United States that exhibited significant negative temperature anomalies, and a large fraction of the Midwest experienced warm anomalies exceeding 2 °C. The subsequent boreal spring was the warmest ever recorded over the same area, and globally, this spring was the fifth warmest in the instrumental record (National Climatic Data Center, 2000b).

Figure 4. Seasonal surface-air temperature anomalies for boreal winter December 1999-February 2000, obtained from the U.S. NOAA National Climatic Data Center (NCDC, 2000a). Values are referenced to the 1992-2000 mean.

Much, but not all, of the planet was also warm last winter, including Europe, central Africa, and mid-latitude oceans in both hemispheres. However, temperatures across a large swath of the central equatorial Pacific were cooler than normal, corresponding to La Niña conditions. Across Australia, the austral summer of 1999-2000 was also somewhat cooler than normal. So this year, Australians may be inclined to dismiss global warming as a non-issue, despite twentieth century warming there consistent with the global average (IPCC, 1998). Mongolia and nearby regions of southern Siberia and northern China were cool and snowy. Individual short-term regional climate anomalies are part of the natural variability from which long-term climate signals must be separated. Yet these short-term, small-scale anomalies are what people feel and remember; the longer-term records (Figs. 2 and 3) allow us to put such short-term anomalies into the proper climatic context.

UNCERTAINTIES IN ATTRIBUTION AND PREDICTION OF CLIMATE CHANGE

The evidence for recent, rapid global warming is clear and seems very significant compared to current estimates of climate change in the Holocene. Thus, the debate over detection of climate change is drawing to a close. Nevertheless, it remains extraordinarily difficult to attribute the warming trend to any particular forcing function with sufficient certainty to satisfy policy makers. To address the attribution problem it is necessary to (1) understand the spatially varying response of the climate system to the forcing in question, i.e., define a particular signal; (2) estimate the expected spatial and temporal variability of the system in the absence of such forcing, i.e., define climate noise; and (3) demonstrate that observed climate change is sufficiently like the former and unlike the latter to confidently make an attribution, i.e., calculate the signal/noise ratio (Hasselmann, 1997). These are essentially the same steps required to make quantitative predictions of the forced response of the climate system to future increases in greenhouse gases.
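The three steps can be reduced to a toy scalar calculation. Treat a trend as the "signal". Estimate the "noise" as the spread of same-length trends found in an unforced control series. Then take their ratio. This sketch assumes that non-overlapping control segments are independent, and it uses hypothetical function names and synthetic data. As the text goes on to explain, real attribution studies need multivariate patterns from climate models rather than a single series.

```python
import random
from statistics import mean, stdev


def ols_slope(values):
    """Least-squares slope per time step of an evenly spaced series."""
    n = len(values)
    tbar = (n - 1) / 2
    vbar = mean(values)
    num = sum((i - tbar) * (v - vbar) for i, v in enumerate(values))
    den = sum((i - tbar) ** 2 for i in range(n))
    return num / den


def trend_signal_to_noise(observed, control, window):
    """Step 3: the observed trend over the last `window` steps, divided by the
    standard deviation of trends in non-overlapping control segments (step 2)."""
    signal = ols_slope(observed[-window:])
    noise_trends = [
        ols_slope(control[start:start + window])
        for start in range(0, len(control) - window + 1, window)
    ]
    return signal / stdev(noise_trends)


# Synthetic data: a white-noise "control climate" and the same kind of noise plus a trend.
rng = random.Random(42)
control = [rng.gauss(0.0, 0.1) for _ in range(1000)]
observed = [0.02 * i + rng.gauss(0.0, 0.1) for i in range(50)]
print(round(trend_signal_to_noise(observed, control, window=50), 1))
```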

A single variable such as global-mean temperature is not sufficient to differentiate a greenhouse-gas-induced climate change from other possible sources of variability. Large-scale numerical models provide the only means of estimating the forced signal (step 1 above) and, in the absence of sufficient high-resolution preindustrial climate data, the only practical way of estimating the climate noise (step 2) (IPCC, 1995, ch. 8; Hasselmann, 1997). Multivariate greenhouse fingerprints are derived by comparing patterns of variability in a numerical simulation in which greenhouse gas concentrations are kept fixed, thereby simulating natural variability, with another run of the same model in which greenhouse gases increase (IPCC, 1995, ch. 8; Hegerl et al., 1997; North and Stevens, 1998). Statistical tests are applied to determine the multivariate spatial pattern of climate anomalies (the fingerprint) that most clearly distinguishes variability in the two runs. Observed data are then examined for variations of the fingerprint pattern as evidence of greenhouse-gas-forced climate change.
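A stripped-down version of the fingerprint procedure fits in a few lines. The assumptions are all hypothetical. Each model "sample" is a vector of regional anomalies. The fingerprint is simply the normalized difference between the forced-run and control-run mean patterns; published studies use "optimal" fingerprints that also weight by the estimated noise covariance, which this sketch omits. The detection statistic is the projection of the observed pattern onto the fingerprint, expressed in standard deviations of the control-run projections. For brevity the toy reuses the same control samples both to build the fingerprint and to estimate the noise. Real studies avoid that shortcut.

```python
import math
from statistics import mean, stdev


def fingerprint(control, forced):
    """Unit vector along (mean forced pattern - mean control pattern)."""
    k = len(control[0])
    diff = [mean(s[i] for s in forced) - mean(s[i] for s in control) for i in range(k)]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]


def project(pattern, fp):
    """Amplitude of `pattern` along the fingerprint (a dot product)."""
    return sum(p * f for p, f in zip(pattern, fp))


def detection_z(observed, control, fp):
    """Observed fingerprint amplitude in units of control-run (noise) spread."""
    null = [project(s, fp) for s in control]
    return (project(observed, fp) - mean(null)) / stdev(null)


# Hypothetical 3-region anomaly patterns (°C); the numbers are invented for illustration.
control = [[0.1, -0.1, 0.0], [-0.1, 0.1, 0.1], [0.0, 0.0, -0.1], [0.05, -0.05, 0.0]]
forced = [[0.6, 0.4, 0.2], [0.5, 0.5, 0.3]]
observed = [0.4, 0.3, 0.1]

fp = fingerprint(control, forced)
print(f"z = {detection_z(observed, control, fp):.1f}")
```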

Fingerprint techniques thus rely on large-scale models to characterize both the signal and the noise in the climate system. Although tremendous progress in coupled ocean-atmosphere modeling has been achieved in recent decades, such models still contain numerous known limitations and deficiencies (IPCC, 1995, ch. 5; Ledley et al., 1999). These include the small-scale parameterizations of clouds, precipitation and turbulence in atmospheric models, the variability of temperature and currents in ocean models, characterization of energy exchanges among the atmosphere, ocean, and biosphere, and the sensitive nonlinear feedback processes that crucially affect the evolution of the coupled climate system (IPCC, 1995, ch. 4). The uncertainties of large-scale modeling are illustrated by the significant discrepancies in climates simulated by different models forced by exactly the same boundary conditions in controlled comparative runs (Barnett, 1999; Bell et al., 2000).

The First Assessment Report flatly stated that it was not possible to attribute twentieth century climate change to increases in greenhouse gases (IPCC, 1990, p. 254). Between 1990 and 1995, substantial model-based research was conducted to constrain the structure and magnitude of the greenhouse warming signal and the climate noise (steps 1 and 2 in the attribution process). As a result, the Second Assessment Report included a positive, but cautious, declaration of attribution, stating that "The balance of evidence suggests a discernible human influence on global climate" (IPCC, 1995, p. 4; see also ch. 8).

The Second Assessment Report explains further that the greenhouse-gas-induced warming signal should emerge gradually from the climate noise over the coming decades, and that the signal/noise ratio should be largest on large spatial scales. Thus, there is an inherent mismatch between the large time and length scales most important for attribution of the causes of global warming and the smaller time and length scales important to the public. Five years of acrimonious debate (based largely on uncertainties in large-scale modeling) over the Second Assessment Report's attribution statement make it clear that its rigorous but uncertain conclusions are far from sufficient to help policy makers reach consensus on this issue.
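An idealized calculation shows why the signal/noise ratio grows with spatial scale. Assume, hypothetically, a forced signal of amplitude $s$ that is the same in each of $M$ regions. Add regional noise of standard deviation $\sigma$ that is independent from region to region. Averaging over the $M$ regions leaves $s$ unchanged but shrinks the noise:

$$
\mathrm{SNR}_{1} = \frac{s}{\sigma},
\qquad
\mathrm{SNR}_{M} = \frac{s}{\sigma/\sqrt{M}} = \sqrt{M}\,\frac{s}{\sigma}.
$$

Take the illustrative (not observed) values $s = 0.2$ °C, $\sigma = 1$ °C, and $M = 100$. A ratio of 0.2 at a single location then becomes 2 for the average. Real climate noise is spatially correlated, so the actual gain is smaller than $\sqrt{M}$. The direction of the effect is the same, however: the signal is hardest to see at the local scales people experience directly.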

Attribution studies have reached modestly more definitive conclusions since 1995 (Hegerl et al., 1997; North and Stevens, 1998; Knutson et al., 1999). Next year's Third Assessment Report will probably include a statement on attribution that is somewhat stronger than the second report, but it will likely not be sufficient to sway policy makers who are reluctant to accept the considerable economic challenges associated with CO2 emission limitations. The climate community's slowly increasing confidence in formally attributing observed recent temperature change to greenhouse gas forcing will remain controversial. Furthermore, attribution of regional-scale climate anomalies, such as the warm winter of 1999-2000 across North America, is simply not possible, yet it is just such smaller-scale variability that is of most concern to policy makers and the public.

Limitations in our ability to make definitive attribution of climate change go hand in hand with the difficulties in making quantitative predictions of climate change. Model-based predictions of future warming vary considerably, although at least modest global warming (at least as much as the twentieth century trend) is common to all the predictions considered by the IPCC in 1995. At regional spatial scales, the global warming signal is still a small component of interannual variability (cf. Fig. 4). Climatic variables other than temperature (especially precipitation) are still harder to model and predict with certainty. Climate prediction is an uncertain enterprise and will remain so.

A COUNTEREXAMPLE: THE MONTREAL PROTOCOL

It is instructive to compare the FCCC and its Kyoto Protocol with the development of policy in response to stratospheric ozone depletion. The international community acknowledged the importance of the ozone depletion problem (analogous to the FCCC) in the Vienna Convention in 1985. The goals of the Vienna Convention were addressed by the Montreal Protocol on Substances that Deplete the Ozone Layer (including chlorofluorocarbons, or CFCs, and halons), which was negotiated in September 1987 and entered into force at the beginning of 1989 (Benedick, 1991), with subsequent strengthening amendments. The effort to restrain CFC production and promote more ozone-friendly substitutes for CFCs has been widely regarded as a success. Production of CFCs and halons is severely restricted. CFC concentrations have decreased in the troposphere, and model predictions, based on confident attribution of the cause of stratospheric ozone depletion, suggest that the ozone hole should be repaired by late in the 21st century (WMO, 1998).

In some important ways, the observed twentieth century increase in ozone-depleting substances is analogous to increases in greenhouse gases: The buildup is unequivocally anthropogenic, the gases in question have long atmospheric lifetimes so emissions are mixed worldwide and remain in the atmosphere for decades or longer, and anthropogenic emissions are generated internationally. The basic challenges of policy formulation aimed at mitigating ozone-depleting emissions are therefore also analogous. In particular, emission controls must be implemented internationally, causing tension between industrialized countries (the largest current emitters who, therefore, caused the current problem) and the lesser-developed countries, whose emissions are increasing rapidly but who want to avoid having their economic development options limited by new emissions controls.

However, there are several critical intrinsic differences in the temporal and spatial scales of the environmental problems associated with CFCs or greenhouse gases that make the global warming attribution and prediction problems much more difficult. (There are also large differences in the social and economic impacts of CFC versus CO2 emissions restrictions, but I will concentrate on climate effects here.) The most dramatic manifestation of ozone depletion, the Antarctic ozone hole, is an annual event that occurs at a fixed time of year at a known latitude and altitude. The ozone hole was first documented by Farman et al. (1985), after the adoption of the Vienna Convention but, perhaps significantly, before the Montreal Protocol was signed.

The Antarctic ozone hole was a nasty surprise that focused international attention on the stratospheric ozone layer. How ozone policy would have evolved had there been no ozone hole is actively debated (Benedick, 1991; Ungar, 1995; Betsill and Pielke, 1998), but several sharp distinctions between ozone depletion and global warming are clear. The ozone hole is observable every year. Policy makers and the public obtained direct experience with ozone hole observations and predictions, making them meaningful and believable, like day-to-day weather forecasts (Pielke et al., 1999). In contrast, global warming is a slow, continual, global-scale process that is much harder to define and separate from other climatic variability; it does not occur repeatedly and discretely. A warmer climate is not unequivocally bad for everyone, unlike a stratosphere with less ozone. It is difficult to convince policy makers and the public of the importance of hypothetical climate changes, and correspondingly much easier to explain that an event like the ozone hole seen previously might recur or amplify.

Furthermore, the predictable seasonal cycle of Antarctic ozone depletion allowed annual hypothesis testing to take place, leading to rapid progress on the attribution problem. In the late 1980s, predictions of the extent and magnitude of Antarctic ozone depletion were made each year based on testable theories for what caused it. Measurement campaigns were designed to test these theories. The annual repetition of the ozone hole allowed the implementation of a concentrated scientific campaign to both measure and model the processes involved, culminating in an extraordinarily definitive attribution: halocarbons are the culprit (WMO, 1994).

In contrast, there is just one global climate record for the past few centuries to use in assessing model performance, and the research community gets just one chance to predict the slow global change associated with greenhouse gas increases. The public cannot see this phenomenon occurring repeatedly. Global warming predictions made now will not be verified for decades. Even if the warming trends evident in Figures 2 and 3 continue into the twenty-first century, definitive attribution of these trends will be difficult and uncertainties will be large enough to provoke skepticism and debate. So long as policy development hinges on attribution and prediction of climate change, scientific uncertainties will seem large even if the climate continues to warm up.

RECOMMENDATIONS AND CONCLUSIONS

The IPCC will issue its Third Assessment Report next year. It will encapsulate some excellent science, but we should anticipate that it will not serve to move policy forward in a significant way. Two of the principal scientific sticking points, attribution and prediction of climate change, are related to inherent limitations in our ability to model the climate system with sufficient confidence to assuage skepticism and debate on those issues. Emphasizing the aspects of climate change that are most uncertain is a sound basis for scientists to generate research plans but a poor basis for us to interact with the public. We will not truly be able to attribute global warming to greenhouse gas increases until the climate has already warmed a great deal relative to pre-twentieth century temperatures. We cannot predict with certainty how much climate change will occur on local or regional scales decades in advance.

Constructive scientific input to global warming policy discussion should focus on the more tangible and certain aspects of climate science, grounded in observations. Our knowledge of climate change in the recent geological past is broad and deep, the means for refining curves such as Figure 3 still further are known, and our ability to put current change into late Holocene context is advanced relative to our ability to capture this variability in large-scale models. The past and present cannot serve as a complete guide to the future, especially since current levels and rates of change of greenhouse gases have no known analog in the past, but observations provide a much firmer foundation for public discussion than model-based research.

Emphasizing detection and monitoring of climate change would decrease public confusion over what is known versus what is uncertain. The policy-making community and the public should be guided to anticipate, and plan for, climate changes that cannot be predicted with certainty. Even if we cannot now say for sure how much of the warming in Figures 2 and 3 is due to increased CO2, we can say that recent change is certainly consistent with plausible expectations of greenhouse warming and that there is no indication that recent climate changes will reverse or abate. We should use observations to describe previous episodes of extreme climate anomalies that may become more common as the climate warms up. Policy guidance of this sort, grounded in observation augmented by a range of model-generated future scenarios, would lead to greater emphasis on adaptation to uncertain climate futures and "no regrets" economic policies (Pielke et al., 1999).

Waiting for the attribution and prediction problems to be solved, which is the current de facto policy, is itself an important policy decision and should be acknowledged as such. A more fruitful approach to scientific involvement in the policy process would begin by emphasizing improved descriptions of past climate change, and enhanced monitoring and detection of current climate change.

ACKNOWLEDGMENTS

Exceptionally constructive critical reviews by E. Barron, P. Fawcett, M.F. Miller, R. Pielke Jr., and M. Roy were extremely helpful. I thank M. Mann and T. Ross for Figures 3 and 4. My research at the University of New Mexico has been supported by the National Science Foundation.

REFERENCES CITED

Barnett, T.P., 1999, Comparison of near-surface air temperature variability in 11 coupled global climate models: Journal of Climate, v. 12, p. 511-518.

Bell, J., Duffy, P., Covey, C., Sloan, L., and the CMIP investigators, 2000, Comparison of temperature variability in observations and sixteen climate model simulations: Geophysical Research Letters, v. 27, p. 261-264.

Benedick, R., 1991, Ozone diplomacy: Cambridge, Harvard University Press, 613 p.

Betsill, M.M., and Pielke, R.A. Jr., 1998, Blurring the boundaries: Domestic and international ozone politics and lessons for climate change: International Environmental Affairs, v. 10, p. 147-172.

Farman, J.C., Gardiner, B.C., and Shanklin, J.D., 1985, Large losses of total ozone in Antarctica reveal seasonal ClOx/NOx interaction: Nature, v. 315, p. 207-210.

Hasselmann, K., 1997, Climate change: Are we seeing global warming?: Science, v. 276, p. 914-915.

Hegerl, G.C., Hasselmann, K., Cubasch, U., Mitchell, J.F.B., Roeckner, E., Voss, R., and Waszkewitz, J., 1997, Multi-fingerprint detection and attribution analysis of greenhouse-gas, greenhouse-gas-plus-aerosol, and solar forced climate change: Climate Dynamics, v. 13, p. 613-634.

Intergovernmental Panel on Climate Change (IPCC), 1990, IPCC first assessment report: Scientific assessment of climate change-Report of Working Group I, Houghton, J.T., et al., eds.: Cambridge, Cambridge University Press, 365 p.

Intergovernmental Panel on Climate Change (IPCC), 1995, IPCC second assessment report: Climate change, 1995-The science of climate change, Houghton, J.T., et al., eds.: Cambridge, Cambridge University Press, 572 p.

Intergovernmental Panel on Climate Change (IPCC), 1998, The regional impacts of climate change-An assessment of vulnerability, Watson, R.T., et al., eds.: Cambridge, Cambridge University Press, 517 p.

Jones, P.D., 1988, The influence of ENSO on global temperatures: Climate Monitor, v. 17, p. 80-89.

Karl, T.R., Knight, R.W., and Baker, B., 2000, The record breaking global temperatures of 1997 and 1998: Evidence for an increase in the rate of global warming?: Geophysical Research Letters, v. 27, p. 719-722.

Keeling, C.D., and Whorf, T.P., 1999, Atmospheric CO2 records from sites in the SIO air sampling network, in Trends: A compendium of data on global change: Oak Ridge, Tennessee, USA, Carbon Dioxide Information Analysis Center, Oak Ridge National Laboratory, U.S. Department of Energy.

Knutson, T.R., Delworth, T.L., Dixon, K.W., and Stouffer, R.J., 1999, Model assessment of regional surface temperature trends (1949-1997): Journal of Geophysical Research, v. 104, p. 30,981-30,996.

Ledley, T.S., Sundquist, E.T., Schwartz, S.E., Hall, D.K., Fellows, J.D., and Killeen, T.L., 1999, Climate change and greenhouse gases: Eos (Transactions, American Geophysical Union), v. 80, p. 453 ff.

Mann, M.E., Bradley, R.S., and Hughes, M.K., 1999, Northern Hemisphere temperatures during the past millennium: Inferences, uncertainties, and limitations: Geophysical Research Letters, v. 26, p. 759-762.

National Climatic Data Center, 2000a, Climate of December 1999-February 2000: Global analysis, available at www.ncdc.noaa.gov/ol/climate/research/2000/win/global.html (August 2000).

National Climatic Data Center, 2000b, Climate of March 2000-May 2000: Global analysis, available at www.ncdc.noaa.gov/ol/climate/research/2000/spr/global.html (August 2000).

National Research Council, 2000, Reconciling observations of global temperature change: National Academy Press, 85 p.

North, G.R., and Stevens, M.J., 1998, Detecting climate signals in the surface temperature record: Journal of Climate, v. 11, p. 563-577.

Oerlemans, J., 1994, Quantifying global warming from the retreat of glaciers: Science, v. 264, p. 243-245.

Pielke, R.A. Jr., Sarewitz, D., Byerly, R. Jr., and Jamieson, D., 1999, Prediction in the earth sciences and environmental policy making: Eos (Transactions, American Geophysical Union), v. 80, p. 309 ff.

Ungar, S., 1995, Social scares and global warming: Beyond the Rio Convention: Society and Natural Resources, v. 8, p. 443-456.

World Meteorological Organization (WMO), 1994, Scientific assessment of ozone depletion: 1994: WMO Global Research and Monitoring Project Report No. 37.

World Meteorological Organization (WMO), 1998, Scientific assessment of ozone depletion: 1998: WMO Global Research and Monitoring Project Report No. 44.
